Source: www.auntminnie.com

Author: Erik L. Ridley, AuntMinnie.com staff writer

Radiology and pathology artificial intelligence (AI) algorithms can help in diagnosing and assessing the prognosis of oral squamous cell carcinoma (SCC), according to a literature review published August 19 in JAMA Otolaryngology–Head & Neck Surgery.

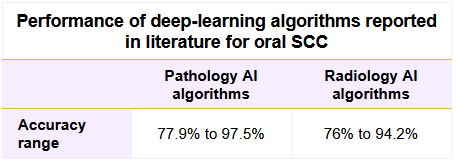

After reviewing published studies on the use of AI with pathology and radiology images in patients with oral SCC, researchers from the University of Hong Kong concluded that the technology yielded good classification accuracy.

“The successful use of deep learning in these areas has a high clinical translatability in the improvement of patient care,” wrote the authors, led by first author Chui Shan Chu and senior author Dr. Peter Thomson, PhD.

In radiology applications for oral SCC, a convolutional neural network (CNN) was able to predict disease-free survival with 80% accuracy, sensitivity, and specificity from PET images, the researchers reported. Another CNN showed lower performance (66.9% sensitivity, 89.7% specificity, and 84% accuracy) when used on CT for predicting disease-free survival. A deep-learning algorithm also yielded 90% sensitivity for detecting lymph node metastasis from oral SCC on CT.
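For readers less familiar with how sensitivity, specificity, and accuracy relate, the following is a minimal sketch rather than anything taken from the review. The function names and the patient counts are hypothetical and chosen only for illustration. It computes the three metrics from a confusion matrix and shows that accuracy is a prevalence-weighted blend of the other two.

```python
def confusion_metrics(tp, fn, tn, fp):
    """Return (sensitivity, specificity, accuracy) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)            # share of true positives detected
    specificity = tn / (tn + fp)            # share of true negatives detected
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return sensitivity, specificity, accuracy


def implied_prevalence(sensitivity, specificity, accuracy):
    """Solve accuracy = p * sensitivity + (1 - p) * specificity for p.

    p is the fraction of positive cases in the evaluated set. This is
    undefined when sensitivity equals specificity.
    """
    return (specificity - accuracy) / (specificity - sensitivity)


# Hypothetical counts, not data from any study in the review.
sens, spec, acc = confusion_metrics(tp=80, fn=20, tn=90, fp=10)
print(f"sensitivity={sens:.3f} specificity={spec:.3f} accuracy={acc:.3f}")

# Applied to the rounded CT figures quoted above (66.9%, 89.7%, 84%).
print(f"implied positive fraction: {implied_prevalence(0.669, 0.897, 0.84):.2f}")
```

If the three CT figures were measured on the same test set, this arithmetic suggests that about a quarter of the cases were positive. That is an inference from rounded numbers, not a value the researchers reported.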

In addition to providing prognosis predictions, AI could facilitate personalized treatment from CT images, according to the researchers. One model was 76% accurate for predicting xerostomia, or dry mouth, a toxicity-related adverse effect of radiotherapy. Another study determined that radiation dose distribution is the most crucial factor for predicting toxicity.

The researchers noted that, to the best of their knowledge, no studies have utilized deep learning with MRI in oral SCC applications.

As for pathology, AI has most frequently been used to facilitate oral SCC diagnosis by classifying cell type and differentiating tumor grade on digital hematoxylin and eosin-stained tissue images. A CNN algorithm, for example, achieved 96.4% accuracy for differentiating cancer cells from six different types of nontumor cells. Another algorithm was 84.8% accurate for differentiating head and neck SCC from thyroid cancer and metastatic lymph node from breast cancer.

What’s more, other classifiers stratified oral SCC images into four classes with 97.5% accuracy and classified hematoxylin and eosin-stained oral tissue images as either normal or dysplastic with 89% accuracy. In addition, an algorithm was able to predict survival with an average accuracy of 80%.

“In oral cancer research, particularly that related to SCC, deep learning with the use of readily available clinical images derived from hematoxylin and eosin-stained pathological sections and CT-based radiography is demonstrated to have the potential to aid clinical decision-making with regard to cancer diagnosis, prognosis prediction, and treatment guidance,” the authors wrote.

Once their accuracy is validated in different patient cohorts, deep-learning algorithms could be used as a decision-support tool for clinicians, according to the researchers. More research will be needed, however, to determine how best to integrate AI into the routine clinical workflow.

“To expand the application of deep learning, it can be used to infer tumor biology information based on the expression-level alterations or mutations of targetable molecules for immunotherapy and targeted therapy,” the authors wrote. “If this approach is successful, it is economical and time-saving to reveal molecular information of tumors without using additional costly molecular assays.”
